Updated 20200117. See below.

Updated 20200308. I might have a path for upgrade success. Maybe.

Did you know Juniper EX switches have PoE firmware updates to be applied?

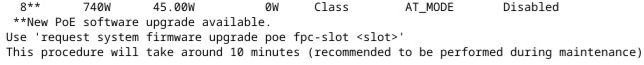

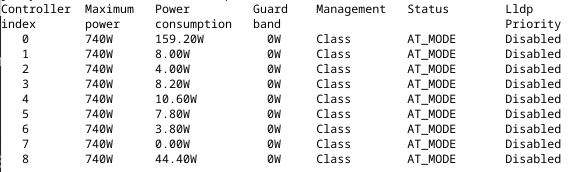

Well, I didn’t until about a year ago when I did an upgrade and was checking on PoE power. Looking at the controller info from show poe controller, I noticed the following:

Huh. Ok. Well, I’ve got an eight-unit stack here, and the Juniper EX software upgrade is usually pretty solid, so let’s upgrade it. And it goes off without a hitch.

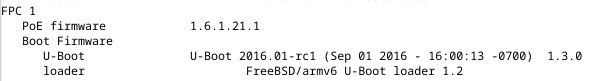

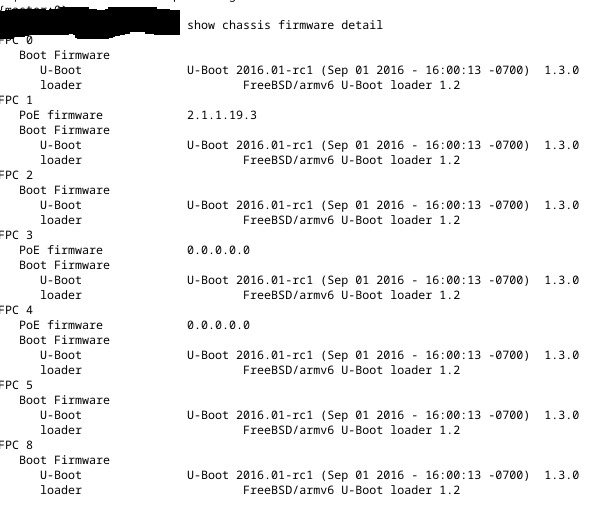

Fast forward nine months, and I’m running into strange issues with PoE and Mercury door controllers, particularly model ‘MRE62E’. Basically the Juniper switches won’t provide power to this model, but the older MRE52s had no problem. Checking the firmware version using show chassis firmware detail, I noticed that the switch had the older 1.x firmware and not the new 2.x.

Alrighty then — let’s upgrade this stack. I upgrade the software using the latest JTAC recommended version (staying in 15.x), then upgrade the PoE firmware — no problem. Door controller is now getting power, I see a MAC address. Everything is hunky dory.

Now let’s upgrade this other stack.

No problems on EX software upgrade. Great. Now upgrade PoE firmware…

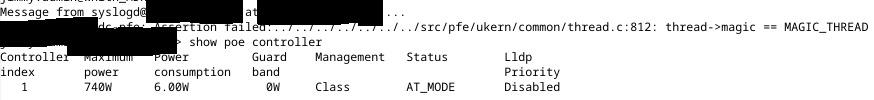

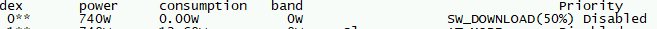

Ten minutes later, I get the following on the terminal:

Of note, and the thing that made me panic, was that out of nine switches in the stack, only one came back online. Checking the firmware versions, I see the following:

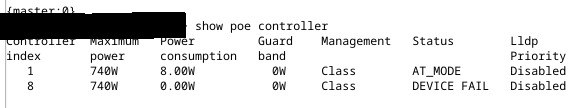

Okay… F***. Well, let’s reboot the stack; perhaps a reboot is needed*. After reboot, I get the following:

In the past, when a PoE firmware upgrade failed on me (between now and when I first learned about it), I had no recourse but to RMA the switch. Well, in this case, I don’t have eight spare switches to fill in temporarily while I wait for an RMA! WTF am I going to do?!

Solving the PoE Firmware Upgrade Failure

If you’re in the same situation as I was in, take a deep breath — you’re not dead in the water.

There are three scenarios for a PoE firmware upgrade failure that I’ve encountered, and I have a solution for each:

- PoE Firmware Failure #1 – After the firmware upgrade, you see a mixed result of firmware versions, some being 0.0.0.0.0, some being correct (2.1.1.19.3**), and some missing/blank (see picture above showing mixed/missing versions)

- PoE Firmware Failure #2 – Perhaps you did as I did and rebooted, and the PoE controller shows one with the message DEVICE_FAILED (see above)

- PoE Firmware Failure #3 – The #2 option doesn’t work and nothing you do is getting the PoE controller to upgrade. You may also have the process hang during the download, or if the controller is still at DEVICE_FAILED and you try to upgrade, you get the message Upgrade in progress, even after a reboot.
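As a rough aid for spotting failure #1 across a big stack, here is a minimal Python sketch. It assumes you have already copied the PoE version for each FPC out of show chassis firmware detail by hand into a dictionary; the function name and the input shape are my own invention, not anything Junos provides, and it does not parse real CLI output.

```python
# Version the post treats as correct on the 15.x line.
TARGET_VERSION = "2.1.1.19.3"


def slots_needing_upgrade(versions, target=TARGET_VERSION):
    """Return the FPC slots whose PoE firmware is blank, zeroed, or not the target.

    `versions` is a hypothetical mapping of FPC slot number -> version string
    (or None when the field is missing), filled in by hand from the CLI.
    """
    bad = []
    for slot, version in versions.items():
        cleaned = (version or "").strip()
        if cleaned != target:
            bad.append(slot)
    return sorted(bad)


# Example: one good member, one zeroed, two blank/missing.
example = {0: "2.1.1.19.3", 1: "0.0.0.0.0", 2: "", 3: None}
print(slots_needing_upgrade(example))
```

Anything the function returns is a slot that still needs the one-at-a-time treatment described in the solutions.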

For all of these solutions, here are some tips/info about the PoE upgrade procedure until Juniper fixes the process for upgrading them all at once:

- Upgrade one at a time.

Solution for PoE Firmware Failure #1

If you encounter this failure, DON’T REBOOT THE STACK. You’ll make your life harder if you do.

Next, Juniper TAC (finally) has a solution, and it requires remote/on-site hands. If you’re going on-site or working with someone remotely, get yourself a cup of coffee (or beverage of choice) and some podcasts lined up, because you’re going to be doing this a while (~10 minutes for each switch/FPC).

From their site, the solution is the following (with my own notes):

- Power cycle the affected FPC (re-seat the power cord). Do not perform a soft reboot.

- After the FPC joins the VC or the standalone device reboots, execute one of the following commands in operational mode:

request system firmware upgrade poe fpc-slot <slot>

or

Note: This is the method I used

request system firmware upgrade poe fpc-slot 1 file /usr/libdata/poe_latest.s19

JTAC Note: You need to change the fpc-slot number accordingly. Also, it is recommended that you push the PoE code one by one instead of adding all members in the virtual-chassis setup. (Emphasis mine)

- After the above command is executed, the FPC should automatically reboot. If not, reboot from the Command Line Interface.

Note: Be patient and wait. No, seriously…wait. It takes a while. If you need to reboot, you’re rebooting the whole unit AFAIK:

request system reboot

- After the FPC is online, check the PoE version with the show chassis firmware detail command. The PoE version should be the latest version (2.1.1.19.3) after the above steps are completed.

- If the version is correct, the PoE devices should work.

- Repeat the above steps to upgrade the PoE versions on other FPCs in the virtual-chassis setup.
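Since the whole point is to push the firmware to one FPC at a time, it can help to write the commands out ahead of time so nobody fat-fingers a slot number at 2 AM. The following is a small Python sketch that only builds the command strings; it does not connect to a switch. The function name is hypothetical, and the file path is simply the one I used above.

```python
# Firmware image path as used in this post; adjust if yours differs.
POE_IMAGE = "/usr/libdata/poe_latest.s19"


def poe_upgrade_commands(slots, image=POE_IMAGE):
    """Build one PoE upgrade command per FPC slot, in ascending slot order."""
    commands = []
    for slot in sorted(set(slots)):
        if slot < 0:
            raise ValueError(f"invalid FPC slot: {slot}")
        commands.append(
            f"request system firmware upgrade poe fpc-slot {slot} file {image}"
        )
    return commands


# Print a checklist for a three-member stack; run each line only after the
# previous FPC has finished upgrading and shows the right version.
for command in poe_upgrade_commands([2, 0, 1]):
    print(command)
```

The output is meant to be pasted one line at a time, with the power cycle and the version check from the steps above done for each FPC in between.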

One thing to note: while it’s doing its upgrade, you can see the progress with show poe controller, but at some point it will hang at 95%, then disappear, then come back, and then the process will be complete. In other words…WAIT, unless you want to try out the solution for failure #2. 😆

Solution for PoE Firmware Failure #2

In this scenario, you rebooted the stack and something failed. The following is similar to solution #1, but the failed PoE controller basically has to be upgraded twice. The steps:

- Execute the following command to reload the firmware on the FPC:

request system firmware upgrade poe fpc-slot 1 file /usr/libdata/poe_latest.s19

Note: You need to change the fpc-slot number accordingly. - The PoE controller will disappear when you run

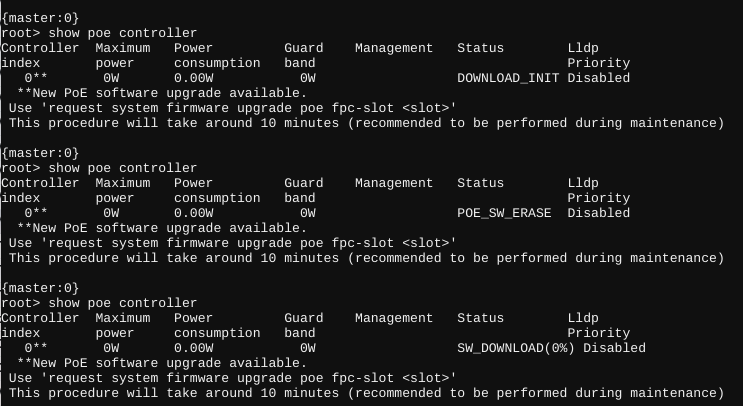

show poe controller, then come back and start upgrading like this:

- After the firmware upgrade completes, the firmware will likely be incorrect (it always was for me). Power cycle the affected FPC (re-seat the power cord). Do not perform a soft reboot.

- After the FPC joins the VC or the standalone device reboots, execute the following command in operational mode:

request system firmware upgrade poe fpc-slot 1 file /usr/libdata/poe_latest.s19

JTAC Note: You need to change the fpc-slot number accordingly. Also, it is recommended that you push the PoE code one by one instead of adding all members in the virtual-chassis setup. (Emphasis mine)

- After the above command is executed, the FPC should automatically reboot. If not, reboot from the Command Line Interface.

Note: Be patient and wait. No, seriously…wait. It takes a while. If you need to reboot, you’re rebooting the whole unit AFAIK:

request system reboot

- After the FPC is online, check the PoE version with the show chassis firmware detail command. The PoE version should be the latest version (2.1.1.19.3) after the above steps are completed.

- If the version is correct, the PoE devices should look like this:

- Repeat the above steps to upgrade the PoE versions on other FPCs in the virtual-chassis setup.

Just like solution #1, one thing to note is that when it’s doing its upgrade you can see the progress with show poe controller, but at some point it will hang at 95%, then disappear, then come back, then the process will be complete — in other words…WAIT! You don’t really want to re-apply this whole process, do you?
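If you are scripting any of this, the "WAIT" part is the bit worth encoding. Below is a minimal Python sketch of a patient polling loop. It makes no assumptions about how you talk to the switch: read_version is a hypothetical callable you would supply yourself (for example, something that runs show chassis firmware detail over your own SSH tooling and returns the PoE version string, or None when the controller has vanished). The 30-minute timeout and 30-second interval are my guesses, not Juniper guidance.

```python
import time


def wait_for_version(read_version, target="2.1.1.19.3", timeout_s=1800,
                     interval_s=30, sleep=time.sleep, clock=time.monotonic):
    """Poll read_version() until it returns `target` or `timeout_s` elapses.

    Returns True once the target version is seen, False on timeout.
    A missing controller (None or an exception) just means "not yet".
    """
    deadline = clock() + timeout_s
    while True:
        try:
            version = read_version()
        except Exception:
            version = None  # controller can disappear mid-upgrade; keep waiting
        if version == target:
            return True
        if clock() >= deadline:
            return False
        sleep(interval_s)


# Dry run with canned readings instead of a real switch: the controller is
# missing, then zeroed, then finally reports the expected version.
readings = iter([None, "0.0.0.0.0", "2.1.1.19.3"])
print(wait_for_version(lambda: next(readings), sleep=lambda seconds: None))
```

The loop deliberately never issues a reboot or a second upgrade on its own; it only tells you whether the version showed up within the time you were willing to wait.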

Solution for PoE Firmware Failure #3 (Update 20200117)

I recently had some more issues, and solution #2 just wasn’t doing the trick, so I offer solution #3, which I’ve had success with but there’s a caveat/rabbit hole that may come of it. This is the nuke-from-orbit approach on the switch if you want to avoid doing an RMA (or if you have no choice).

The gist of it: disconnect the switch from the VC (if connected), zeroize and reboot the switch (performing an OAM recovery if the zeroize fails), then perform the firmware upgrade.

From my experience, there are a few different scenarios that you’ll encounter when you need to use this method:

- During the firmware upgrade, the process just hangs/stalls. You’ll run show poe controller and at some point the download hangs/stalls like this:

- You receive a DEVICE_FAIL for any reason and nothing is resolving it, like this:

- Your switch is stuck at upgrading the firmware. No matter what you run, the switch displays the following message: Upgrade in progress. In this scenario, the switch just thinks it’s still in the process of upgrading, but no matter how long you wait (or if you can’t wait some indefinite period of time for it to upgrade), the switch won’t upgrade the firmware.

What we need to do at this point is just get the switch to a fresh state so that we can upgrade the PoE controller. Believe it or not, this is actually one of the awesome things about Juniper equipment: when one component of the switch is hosed, the entire switch isn’t hosed and can still function normally. For instance, I have had a switch with a failed PoE controller that still operated like a non-PoE switch without issue; i.e., Juniper allows for components to be recoverable.

Here’s the solution I came up with:

- Step 1: Zeroize the switch:

request system zeroize

In this step, we’re just starting fresh and clearing out the configuration, which takes about 10 minutes and then reboots. If the switch still thinks there’s an upgrade in progress for the PoE controller, we’re clearing it out. It’s possible that this may fail due to storage issues. If that’s the case go to the next step, otherwise skip to bullet #3.

- If step 1 fails: Perform an OAM recovery:

request system recover oam-volume

This is an optional step, and I’ve had to do this when zeroize would fail. If step #1 fails, try this first. It takes about 10 minutes as it copies the OAM partition then compresses it for the Junos volume.

Caveat: EX3400s, even in 18.2 land, still have storage issues sometimes. I have one switch that couldn’t recover from oam-volume, and I’m not sure why. I’ll update this once I have a solution.

- After the switch reboots, the controller will still come up as failed when you run show poe controller. Go ahead and run the upgrade again:

request system firmware upgrade poe fpc-slot 1 file /usr/libdata/poe_latest.s19

It should behave like this after running the command:

- The switch should behave normally at this point, upgrading normally. If it doesn’t then you’ll likely need to replace the switch (or live without PoE).

And a reminder, just like solutions #1 and #2: while it’s doing its upgrade you can see the progress with show poe controller, but at some point it will hang at 95%, then disappear, then come back, and then the process will be complete. In other words…WAIT! You don’t really want to re-apply this whole process, do you?

Final Thoughts

Here’s the kicker for me: I’ve had this work just fine for stacks and single switches alone, and fail on stacks and single switches alone. I can’t find the common denominator here. Perhaps there’s a hardware build that has this more than others, but I can’t figure it out. The official documentation doesn’t hint at a best practice for this (other than maintenance hours), so I’m uncertain of the best approach.

(Update) Juniper does have an official bug report for this, and it is apparently fixed in 15.1X53-D592, but I had the issue on 18.2R3, so I’m not convinced it’s resolved yet.

Here are some ideas I have to change my PoE firmware upgrade procedure (unsure if this will help):

- Turning off PoE on all interfaces

- Upgrading one at a time.

- Trying an earlier version of the JTAC-recommended software, then going to the latest recommended. Example: I had no problems with 15.1X53-D59.4 or 15.1X53-D590, but the sample size for determining that is small (only two stacks attempted).

- Update: I can’t find any rhyme or reason, TBH. I’ve had it fail multiple ways, so not sure the above will help.

- Update 2: I have had some success with the following (but I don’t feel that confident about it yet):

- Use the 18.2 branch

- Upgrade one at a time

- Waiting for a period of time after a software upgrade and reboot. Don’t get upgrade-happy. Give the hardware some time to get back up and going.

- Cross your fingers. And legs. On a full moon.

- Update 2: If you have a controller showing DEVICE FAIL, I’ve had success fixing it just by running:

request system firmware upgrade poe fpc-slot 1 file /usr/libdata/poe_latest.s19

(change fpc-slot # accordingly)

Time will tell.

Hope this helps! If it doesn’t I’d love to know the different experiences others have. Please share if you’ve had success or failures with any of this!

* I swear I saw a message that a reboot is required, but I can’t confirm this (I didn’t screencap it)

** There is a version 3.4.8.0.26, but that’s on the 18.x software version line, and it requires a whole different set of upgrade procedures. This is outside the scope of this post.